Hopper receives faster memory and a performance increase

Last year, Nvidia launched the 4nm H100 accelerator based on the Hopper architecture, and it has been the company’s fastest GPU for AI ever since. Now the company is launching its successor, dubbed the H200. It isn’t a new generation yet, but rather a refresh that will lead Nvidia’s lineup until the next generation with the Blackwell architecture is released. The main change in the H200 is faster memory, which should also lift overall performance.

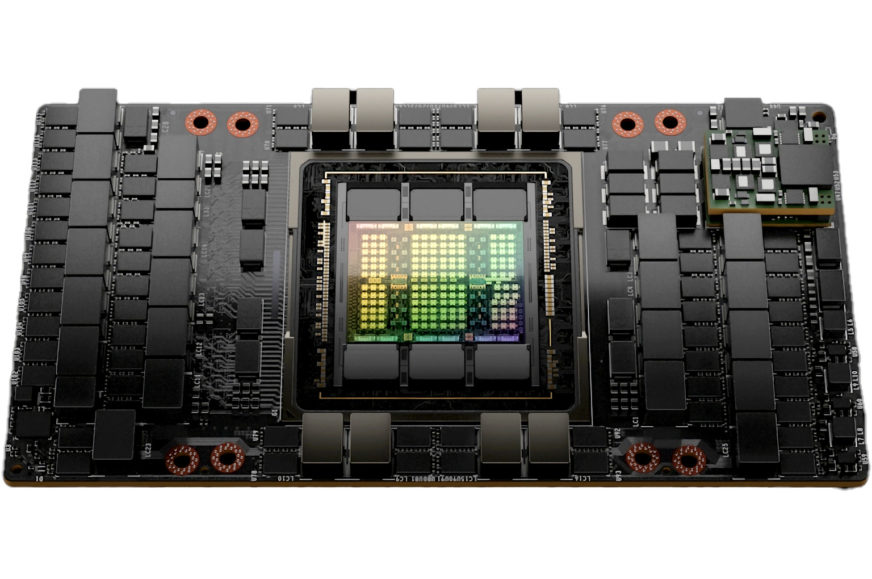

The H200 accelerator should use the same 4nm Hopper chip with 80 billion transistors as the H100, and probably the same mezzanine form factor as well. What is new is the HBM3E memory, which this GPU is apparently the first product on the market to use. The memory capacity is 141GB, an unusually irregular number: six 24GB HBM3E packages should add up to 144GB, but 3GB are unavailable for some reason.

The question is whether the GPU keeps the missing capacity reserved for some dedicated purpose, or whether Nvidia, in cooperation with the memory manufacturers, can disable individual DRAM layers in the HBM3E packages (if these 24GB packages are eight-layer stacks, one DRAM layer would correspond to exactly 3GB of capacity). This could salvage an HBM3E package in which a defect was found only after it had been mounted on the GPU, whereas normally the entire package would have to be deactivated at the cost of a significant loss of performance and memory capacity.
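The capacity arithmetic behind this speculation can be checked in a few lines. This is only a sketch: the eight-layer stack height is the assumption discussed above, not something Nvidia has confirmed, and the variable names are purely illustrative.

```python
# Capacity arithmetic for the H200 memory configuration described above.
PACKAGES = 6             # HBM3E packages on the GPU (from the article)
PACKAGE_GB = 24          # capacity of one package in GB (from the article)
LAYERS_PER_PACKAGE = 8   # ASSUMPTION: eight-layer stacks, unconfirmed
USABLE_GB = 141          # capacity quoted by Nvidia

physical_gb = PACKAGES * PACKAGE_GB          # total capacity physically present
missing_gb = physical_gb - USABLE_GB         # capacity that is not exposed
layer_gb = PACKAGE_GB / LAYERS_PER_PACKAGE   # capacity of one DRAM layer

print(f"physically present: {physical_gb} GB")
print(f"not exposed:        {missing_gb} GB")
print(f"one DRAM layer:     {layer_gb:g} GB")
print(f"missing capacity corresponds to {missing_gb / layer_gb:g} layer(s)")
```

Under that assumption the missing 3GB matches exactly one DRAM layer, which is what makes the disabled-layer explanation plausible, although it does not rule out a reserved region.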

Memory bandwidth reaches up to 4.8 TB/s, which on a 6144-bit bus across six packages means the memory should run at an effective speed of about 6250 MHz (6.25 Gb/s per pin). For the H100, Nvidia claimed a bandwidth of 3 TB/s, so this should be an increase of up to 60%. The gain comes not only from the higher HBM3E clock speed but also from using the full 6144-bit interface, whereas the H100 used only 5120 bits, with just five of its six HBM3 packages active.
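The per-pin data rate can be back-calculated from the quoted bandwidth and bus width. The sketch below assumes decimal units (1 TB/s = 1000 GB/s) and uses the bandwidth figures given in this article; the helper name `per_pin_gbps` is made up for illustration.

```python
def per_pin_gbps(bandwidth_tb_s: float, bus_width_bits: int) -> float:
    """Per-pin data rate in Gb/s from total bandwidth and bus width."""
    total_gbit_s = bandwidth_tb_s * 1000 * 8  # TB/s -> GB/s -> Gb/s (decimal)
    return total_gbit_s / bus_width_bits

H200_BW, H200_BUS = 4.8, 6144   # TB/s and bits, as quoted above
H100_BW, H100_BUS = 3.0, 5120   # TB/s and bits, as quoted above

h200_pin = per_pin_gbps(H200_BW, H200_BUS)
h100_pin = per_pin_gbps(H100_BW, H100_BUS)

bus_gain = H200_BUS / H100_BUS    # contribution of the wider active bus
clock_gain = h200_pin / h100_pin  # contribution of the faster memory
total_gain = H200_BW / H100_BW    # overall bandwidth ratio

print(f"H200 per pin: {h200_pin:.2f} Gb/s")
print(f"H100 per pin: {h100_pin:.2f} Gb/s")
print(f"bus width gain:  x{bus_gain:.2f}")
print(f"data rate gain:  x{clock_gain:.2f}")
print(f"total bandwidth: x{total_gain:.2f}")
```

The two factors multiply to the overall ratio, so on these numbers the sixth active package contributes a factor of about 1.2 and the faster memory a factor of about 1.33.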

We don’t know whether the clock speeds or the number of compute units have increased. In the H100, the chip had 16,896 shaders (132 SMs) and 528 tensor cores enabled with a boost clock of around 1.98 GHz, giving a raw performance of 66.9 TFLOPS in FP32 operations and 33.5 TFLOPS in FP64. Using tensor cores and 8-bit precision, the theoretical performance should approach 2000 TOPS. The TDP of the original version was 700 W; again, we don’t know yet whether it has stayed the same.
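The relationship between shader count, clock and peak throughput is simple enough to sketch. The code below assumes the usual convention of counting one fused multiply-add as two FLOPs per shader per clock, and an FP64 rate of half the FP32 rate (which matches the two figures quoted above); the function names are illustrative only.

```python
SHADERS = 16_896        # enabled FP32 shaders in the H100 (from the article)
SMS = 132               # enabled SMs (from the article)
TENSOR_CORES = 528      # enabled tensor cores (from the article)
FP32_TFLOPS = 66.9      # quoted peak FP32 throughput
FLOPS_PER_CLOCK = 2     # ASSUMPTION: one FMA counted as two FLOPs

def peak_tflops(shaders: int, clock_ghz: float) -> float:
    """Theoretical peak in TFLOPS for a given shader count and clock."""
    return shaders * FLOPS_PER_CLOCK * clock_ghz / 1000

def implied_clock_ghz(shaders: int, tflops: float) -> float:
    """Clock in GHz that a quoted peak TFLOPS figure corresponds to."""
    return tflops * 1000 / (shaders * FLOPS_PER_CLOCK)

clock = implied_clock_ghz(SHADERS, FP32_TFLOPS)
fp64_tflops = peak_tflops(SHADERS, clock) / 2  # ASSUMPTION: FP64 at half rate

print(f"shaders per SM:      {SHADERS // SMS}")
print(f"tensor cores per SM: {TENSOR_CORES // SMS}")
print(f"implied clock:       {clock:.2f} GHz")
print(f"FP64 at half rate:   {fp64_tflops:.2f} TFLOPS")
```

Should the H200 ship with a different clock or unit count, the same two functions give the new theoretical peak directly.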

Nvidia states that, compared to the H100, the new product can deliver up to 60% higher performance in GPT-3 inference with 175 billion parameters and up to 90% higher performance in Llama 2 inference with 70 billion parameters. In HPC simulation workloads it is said to be up to 2x faster, but this last figure compares it against the 7nm Ampere A100, not the H100. Beware, though, that these are vendor-provided benchmarks, which may be selective and thus misleading. For example, if the company picked results for tasks that were previously severely slowed down because they did not fit into the available memory (a bottleneck the H200 removes), the resulting speedup will not be representative of tasks that were not capacity-limited before.

The H200 will be produced as a standalone mezzanine accelerator, which needs a special carrier motherboard; there is no information about a standard PCI Express version yet. Nvidia will also offer a version combined into one package with an ARM processor, named the H200 Grace Hopper Superchip.

The Jupiter supercomputer at the Jülich Supercomputing Centre in Germany is currently being built on these combined CPU/GPU modules. It will be an Eviden BullSequana XH3000 cluster with just under 24,000 Grace Hopper Superchips. Its power draw is to be up to 18.2 MW, and its performance is to reach 90 EFLOPS in AI operations or up to 1 EFLOPS in scientific computing (FP64), which could put the system in the “exascale” club.
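For a rough sense of scale, the quoted figures can be turned into per-watt and per-module numbers. This is a back-of-the-envelope sketch only: it divides peak performance by peak power, and it rounds "just under 24,000" up to 24,000 modules, so the per-module values are slight underestimates.

```python
POWER_MW = 18.2        # maximum power draw (from the article)
FP64_EFLOPS = 1.0      # peak FP64 performance (from the article)
AI_EFLOPS = 90.0       # peak AI performance (from the article)
SUPERCHIPS = 24_000    # ASSUMPTION: "just under 24,000" rounded up

# 1 EFLOPS = 1e18 FLOPS, 1 MW = 1e6 W, 1 GFLOPS = 1e9 FLOPS, 1 TFLOPS = 1e12 FLOPS
fp64_gflops_per_watt = FP64_EFLOPS * 1e18 / (POWER_MW * 1e6) / 1e9
fp64_tflops_per_chip = FP64_EFLOPS * 1e18 / SUPERCHIPS / 1e12
ai_tflops_per_chip = AI_EFLOPS * 1e18 / SUPERCHIPS / 1e12

print(f"FP64 efficiency:        {fp64_gflops_per_watt:.1f} GFLOPS/W")
print(f"FP64 per Superchip:     {fp64_tflops_per_chip:.1f} TFLOPS")
print(f"AI perf. per Superchip: {ai_tflops_per_chip:.0f} TFLOPS")
```

These are theoretical peaks; sustained results in benchmarks such as those used for supercomputer rankings would be lower.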

Available in Q2 2024

As is usual with Nvidia’s compute GPUs (and other companies’ server products), the current unveiling is preliminary, and real availability will come much later. The H200 should arrive in the second quarter of 2024, when these accelerators will become available from server manufacturers and in cloud services. Nvidia itself will offer these GPUs in four-way or eight-way configurations in its Nvidia HGX servers.

Sources: Nvidia (1, 2), AnandTech

Jan Olšan, editor @ Cnews.cz
